SLAM (simultaneous localization and mapping) is a method used in robotics and navigation that enables a device to build a map of an unknown environment while simultaneously keeping track of its own location within that map. SLAM plays a critical role in autonomous systems such as self-driving cars, unmanned aerial vehicles (UAVs), autonomous underwater vehicles (AUVs), service robots, and augmented reality (AR) devices. It allows these platforms to navigate complex environments without relying on pre-existing maps or external positioning systems such as GPS. SLAM systems combine sensor data, mathematical algorithms, and real-time computing to build maps and track the device’s movement. The core challenge is that the two problems are coupled: estimating where the system is requires a map of its surroundings, and building that map requires knowing where the system is.
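
In probabilistic terms, this coupled problem is often written as estimating one joint distribution. Using a common notation, let $x_{1:t}$ be the device’s poses up to time $t$, $m$ the map, $z_{1:t}$ the sensor observations, and $u_{1:t}$ the motion inputs (for example, odometry). Full SLAM seeks the posterior over the whole trajectory and the map together:

$$
p(x_{1:t}, m \mid z_{1:t}, u_{1:t})
$$

Filter-based methods such as EKF-SLAM typically target the online variant, which keeps only the current pose:

$$
p(x_t, m \mid z_{1:t}, u_{1:t})
$$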

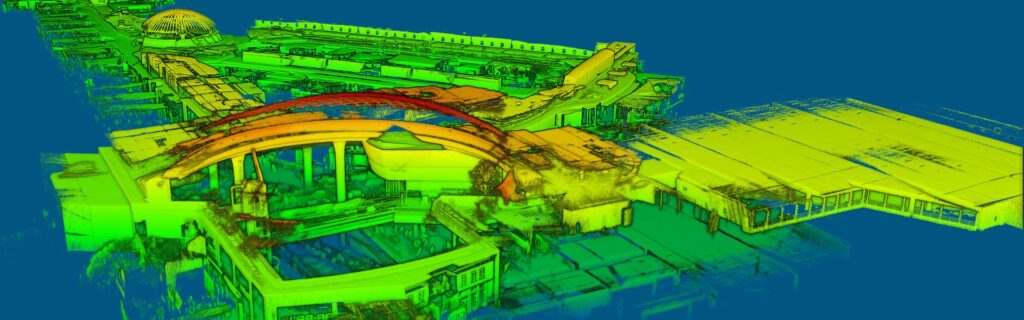

SLAM systems typically use a variety of sensors to gather environmental data. Common sensor inputs include lidar, cameras (monocular, stereo, or RGB-D), radar, and inertial measurement units (IMUs). Together, these sensors measure distances to nearby objects, capture visual features in the environment, and track the system’s own motion. Many SLAM systems depend on sensor fusion, which integrates data from multiple sources so that the weaknesses of one sensor are offset by the strengths of another, increasing accuracy and robustness. SLAM variants are also commonly distinguished by their primary sensor. Visual SLAM uses camera images to detect features such as edges and corners, while lidar-based SLAM uses laser scans to generate point clouds of the environment. The choice of sensor depends on the specific application, operating conditions, and performance requirements.
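
The accuracy gain from fusion can be illustrated with its simplest case: combining two independent readings of the same distance. This minimal sketch uses inverse-variance weighting, the minimum-variance linear way to combine two independent, unbiased measurements. The function name `fuse_estimates` and the sensor values are hypothetical; practical SLAM systems usually perform fusion inside a filter or optimizer rather than as a standalone step.

```python
def fuse_estimates(z1, var1, z2, var2):
    """Fuse two independent, unbiased measurements of the same quantity.

    Each reading is weighted by the inverse of its variance, so the more precise
    sensor dominates, and the fused variance is smaller than either input variance.
    """
    w1 = 1.0 / var1
    w2 = 1.0 / var2
    fused = (w1 * z1 + w2 * z2) / (w1 + w2)
    fused_var = 1.0 / (w1 + w2)
    return fused, fused_var


if __name__ == "__main__":
    # Hypothetical readings of the distance to a wall, in metres:
    # lidar (std 0.02 m, variance 0.0004) and radar (std 0.1 m, variance 0.01).
    distance, variance = fuse_estimates(4.00, 0.0004, 4.10, 0.01)
    print(f"fused distance: {distance:.4f} m, variance: {variance:.6f} m^2")
```

With these assumed values the fused distance lands just above 4.00 m, pulled only slightly toward the noisier radar reading, and its variance is lower than the lidar’s alone.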

A SLAM algorithm continuously estimates the device’s position and updates the map through recursive state estimation, in which each new estimate is computed from the previous one and the latest sensor data rather than from the full history. Common probabilistic tools include the Kalman filter, the extended Kalman filter (EKF), which linearizes nonlinear motion and sensor models, and particle filters. These techniques predict the system’s next position from a motion model and then correct that prediction using new sensor observations. At the same time, the system builds and updates a map, typically represented as an occupancy grid or as a set of landmarks. Loop closure detection is a critical SLAM function that recognizes when the device revisits a previously mapped location. The resulting constraint lets the system correct the drift accumulated since the earlier visit and keep the map consistent.
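
To make the predict-and-correct cycle concrete, here is a minimal sketch of a linear Kalman filter for a toy one-dimensional SLAM problem. It assumes a robot moving along a line with odometry input `u`, a single static landmark at an unknown position `m`, and a sensor that reports the signed distance `z = m - x`. Because the robot position and the landmark share one state vector, the filter localizes and maps at the same time. The function name `kf_slam_1d` and the noise parameters `q` and `r` are illustrative choices, not a library API; real systems use an EKF or another nonlinear estimator over 2D or 3D poses.

```python
import numpy as np


def kf_slam_1d(controls, measurements, q=0.01, r=0.01, landmark_prior_var=1e6):
    """Toy 1D SLAM: jointly estimate robot position x and landmark position m.

    State s = [x, m].
    Motion model:      x_k = x_{k-1} + u_k, with process-noise variance q.
    Measurement model: z_k = m - x_k,       with measurement-noise variance r.
    Returns the final state estimate and its 2x2 covariance.
    """
    s = np.array([0.0, 0.0])                  # robot starts at the origin; landmark guess is arbitrary
    P = np.diag([0.0, landmark_prior_var])    # start pose defines the frame; landmark is unknown
    F = np.eye(2)                             # the landmark is static
    B = np.array([1.0, 0.0])                  # the control input moves only the robot
    Q = np.diag([q, 0.0])                     # only robot motion adds uncertainty
    H = np.array([[-1.0, 1.0]])               # z = m - x

    for u, z in zip(controls, measurements):
        # Predict: apply the motion model and inflate the robot's uncertainty.
        s = F @ s + B * u
        P = F @ P @ F.T + Q

        # Correct: compare the observed distance with the predicted one.
        y = z - (H @ s)[0]                    # innovation
        S = (H @ P @ H.T)[0, 0] + r           # innovation variance
        K = (P @ H.T)[:, 0] / S               # Kalman gain, shape (2,)
        s = s + K * y
        P = (np.eye(2) - np.outer(K, H[0])) @ P

    return s, P


if __name__ == "__main__":
    # Noise-free synthetic run: robot moves 1 m per step, landmark sits at 10 m.
    controls = [1.0] * 5
    measurements = [10.0 - k for k in range(1, 6)]
    state, cov = kf_slam_1d(controls, measurements)
    print("robot x:", state[0], "landmark m:", state[1])
    print("landmark variance:", cov[1, 1])
```

On this noise-free run the estimate ends close to x = 5 and m = 10, and the landmark variance collapses from its initial 1e6 after the first observation. The off-diagonal terms of `P` record how robot and landmark errors are correlated; in EKF-SLAM, those correlations are what allow re-observing a known landmark to reduce the robot’s own uncertainty.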

Benefits of SLAM

One of the main advantages of SLAM is that it enables autonomy where GPS is unreliable or unavailable. Indoor spaces, underground tunnels, dense forests, and underwater regions often lack usable satellite coverage. In such settings, SLAM provides an alternative that relies solely on onboard sensors and processing. In robotics, SLAM allows machines to move, explore, and perform tasks without preloaded maps or continuous human guidance. Warehouse robots use it to keep track of their position as they follow efficient routes and avoid shelves and workers. Drones equipped with SLAM can inspect infrastructure, survey terrain, or navigate confined spaces. Mobile AR devices use it to anchor virtual content to real-world surfaces so that the content stays in place as the user moves.

SLAM also supports advances in autonomous driving. Self-driving vehicles use SLAM techniques to understand the road layout, track their own location, and navigate dynamic environments. Real-time mapping lets a car adapt to unexpected changes such as road closures or obstacles. In healthcare and agriculture, SLAM helps guide robots through cluttered hospital rooms and across farm fields. Underwater vehicles use SLAM to map the ocean floor or inspect submerged structures. In each of these applications, the ability to localize and map simultaneously provides the foundation for safe and efficient operation.

Challenges of SLAM

Despite its benefits, SLAM faces several technical challenges. Sensor noise, dynamic environments (such as moving people or vehicles), and computational complexity can all degrade performance. SLAM algorithms must process large amounts of data in real time, often with limited onboard computing resources. Environments with repetitive features, poor lighting, or few distinctive landmarks can cause localization or mapping errors; for example, two visually similar corridors may be mistaken for the same place. In outdoor applications, weather and terrain variations introduce additional uncertainty. Researchers continue to address these issues with machine learning, semantic mapping, and robust data association techniques, and SLAM systems are becoming more scalable, adaptable, and accurate as algorithms and hardware improve.
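
Data association decides which mapped landmark, if any, a new observation corresponds to. The sketch below shows one simple, conservative rule: gating with an ambiguity check. It assumes observations and landmark estimates are already 2D points in the map frame with isotropic position noise of standard deviation `sigma`, in which case the normalized Euclidean distance equals the Mahalanobis distance. An observation is matched only when exactly one landmark falls inside the gate; if several do, as can happen with repetitive structure, the match is rejected rather than guessed. The function name `associate` and the default gate of 3 standard deviations are illustrative assumptions; full systems typically use each landmark’s own covariance.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def associate(observations: List[Point], landmarks: List[Point],
              sigma: float, gate: float = 3.0) -> List[Optional[int]]:
    """Return, for each observation, the index of its matched landmark or None."""
    matches: List[Optional[int]] = []
    for ox, oy in observations:
        # Landmarks whose normalized distance (Mahalanobis distance for
        # isotropic noise sigma^2 * I) falls inside the gate.
        candidates = [i for i, (lx, ly) in enumerate(landmarks)
                      if math.hypot(ox - lx, oy - ly) / sigma < gate]
        if len(candidates) == 1:
            matches.append(candidates[0])
        else:
            # Zero candidates: possibly a new landmark.
            # Several candidates: ambiguous (e.g. repetitive features), so skip it.
            matches.append(None)
    return matches


if __name__ == "__main__":
    # Hypothetical map: one isolated landmark and two close, similar-looking ones.
    landmarks = [(0.0, 0.0), (5.0, 0.0), (5.0, 0.3)]
    observations = [(0.05, 0.0), (5.0, 0.15), (2.0, 2.0)]
    print(associate(observations, landmarks, sigma=0.1))
```

Rejecting ambiguous matches trades some lost information for safety: a skipped observation only slows convergence, while a wrong association can corrupt both the pose estimate and the map.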